To run a local LLM, you have LM Studio, but it doesn't support ingesting local documents.

There is GPT4All, but I find it much harder to use, and PrivateGPT has a command-line interface, which is not suitable for average users.

So here comes AnythingLLM, a sleek graphical user interface that lets you feed in documents locally and chat with your files, even on consumer-grade computers.

I have used it extensively and found AnythingLLM much better than the other solutions.

Here is how you can use it.

Note:

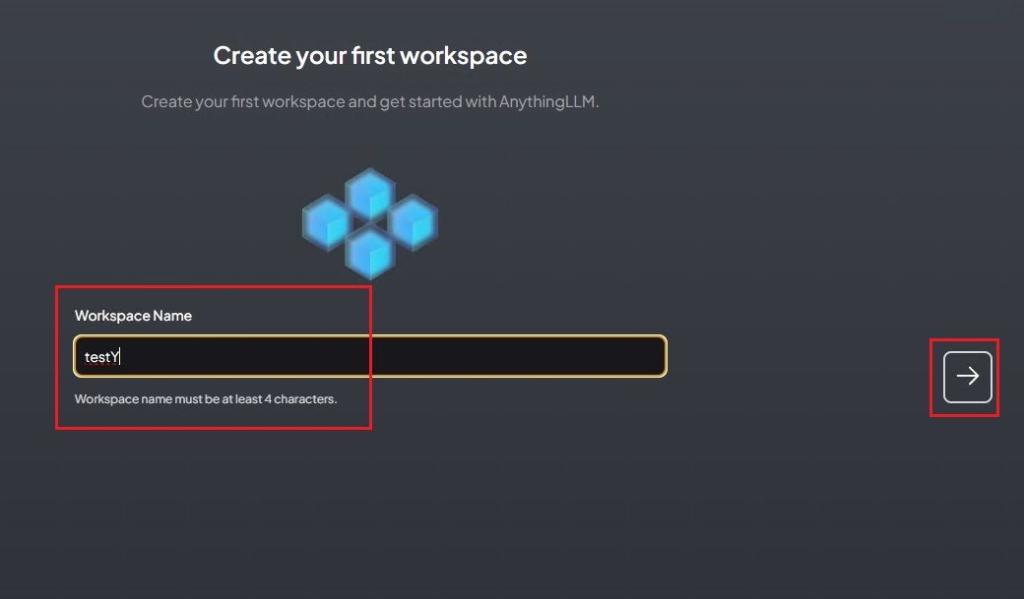

Download and Set Up AnythingLLM

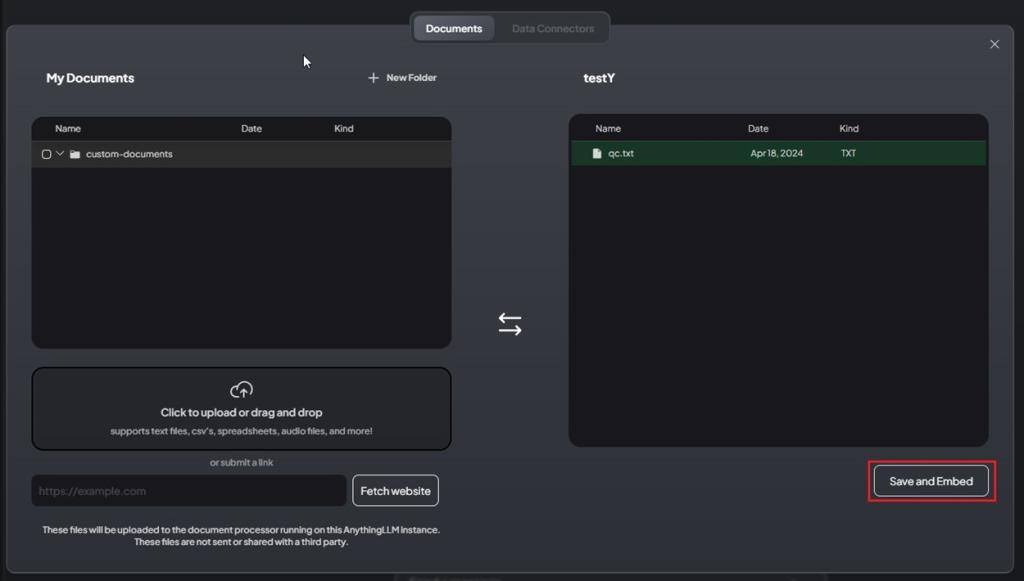

Upload Your Text Files and Chat Locally

So this is how you can ingest your documents and files locally and chat with the LLM securely.

No need to upload your private documents to cloud servers that have vague privacy policies.

Nvidia has launched a similar program called Chat with RTX, but it only works with high-end Nvidia GPUs.

AnythingLLM brings local inferencing even to consumer-grade computers, taking advantage of both the CPU and the GPU on any silicon.
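This post doesn't cover AnythingLLM's internals, but chatting with your own files generally follows a retrieval pattern: split each document into chunks, find the chunks most relevant to a question, and hand them to the LLM as context. The following is a minimal, standard-library Python sketch of that idea, not AnythingLLM's actual implementation. The function names are hypothetical, and simple word-overlap scoring stands in for the embedding model and vector database that real tools typically use. It stops at building the prompt; sending that prompt to a local model is left out.

```python
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 50) -> list[str]:
    """Split a document into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def tokenize(text: str) -> Counter:
    """Lowercased word counts, a crude stand-in for real embeddings (assumption)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def score(query: Counter, chunk: Counter) -> int:
    """Number of words shared between the question and a chunk."""
    return sum((query & chunk).values())


def retrieve(chunks: list[str], question: str, k: int = 2) -> list[str]:
    """Return the k chunks with the highest word overlap with the question."""
    q = tokenize(question)
    ranked = sorted(chunks, key=lambda c: score(q, tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(context: list[str], question: str) -> str:
    """Combine the retrieved chunks and the question into one prompt string."""
    joined = "\n---\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


if __name__ == "__main__":
    doc = ("Our office opens at 9 am. Parking is free for staff. "
           "The cafeteria serves lunch from noon until 2 pm.")
    question = "When does the cafeteria serve lunch?"
    chunks = chunk_text(doc, max_words=6)
    top = retrieve(chunks, question, k=1)
    print(build_prompt(top, question))
```

Swapping `score` for cosine similarity over embedding vectors is the usual next step; word overlap is used here only so the example runs without extra dependencies.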
